If we do not work together, we will not survive


For most of history that sentence meant “humans must cooperate with each other.”

Today it quietly means something else: our teams will not survive complex work unless humans and AI agents learn to think together, inside a shared knowledge plane instead of a mess of isolated tools.

Right now, most engineering teams are sleepwalking into the opposite. Just open X (Twitter) and you will find an avalanche of solopreneurs rocking the boat, and everyone convinced that one-person businesses are the way to go.

The mess we’re building

Every team I talk to is running the same experiment.

- Individual developers wire up their favorite coding assistant or local agent.

- PMs have a research bot.

- Ops has something glued into Slack.

- QA is running a sandboxed tester on each PR.

- Designers are prototyping frantically in their own tools.

- A skunkworks group has built a “multi-agent orchestrator” with its own vector store.

Each agent shows local productivity gains, but none of them share a world.

There is no common, persistent memory where agents and humans see the same work, the same decisions, the same scars. Everything important gets re-explained from scratch, over and over, to each new agent instance. Context repetition becomes a hidden tax that scales superlinearly with team size and agent count.
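To make the "hidden tax" concrete, here is a toy model, not a measurement. The five-minute briefing cost and the assumption that every human ends up briefing every agent through hand-offs are mine, chosen only to show the shape of the curve.

```python
# Toy model only: the briefing cost and the "every human briefs every agent"
# assumption are illustrative, not measured.

def reexplanation_cost(humans: int, agents_per_human: int,
                       minutes_per_briefing: float = 5.0) -> float:
    """Daily minutes spent re-briefing agents when nothing is shared."""
    total_agents = humans * agents_per_human
    briefings = humans * total_agents  # each human re-explains to each agent
    return briefings * minutes_per_briefing


def shared_plane_cost(humans: int, agents_per_human: int,
                      minutes_per_briefing: float = 5.0) -> float:
    """Same team, but each human writes context once to a shared store.

    agents_per_human is accepted for symmetry; in this model agents read
    the shared store, so their count does not add briefing cost.
    """
    return humans * minutes_per_briefing


small_team = reexplanation_cost(humans=5, agents_per_human=2)
large_team = reexplanation_cost(humans=10, agents_per_human=2)
small_shared = shared_plane_cost(humans=5, agents_per_human=2)
large_shared = shared_plane_cost(humans=10, agents_per_human=2)
```

Under these assumptions, doubling the team quadruples the isolated cost, while the shared cost only doubles. Real teams will not match these numbers, but the asymmetry is the point.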

In traditional software, we would never tolerate each microservice having its own private, undocumented database that no one else can query. In 2026, this is exactly how we are deploying AI into organizations.

We call it “AI assistants,” but architecturally it’s closer to giving each employee a private, amnesiac intern.

And yes, some CTOs are adding more SKILLS.md files and docs/ folders everywhere, but a file-based system just doesn’t cut it. As one dev told me: “I ain’t got time to review the docs of the AI anymore.”

What the research says: distributed cognition

There is a different way to think about this, and it already exists in the literature: “distributed cognition”.

The core idea is simple: cognition does not live only inside individual heads. It lives in systems of humans, tools, and shared representations that support joint work. Whiteboards, shared docs, git history, incident runbooks. These are all external structures that change how the group thinks.

Recent work on human–AI teams makes this explicit. Studies of AI in collaborative tasks show that AI systems actively reshape the “cognitive fabric” of teamwork by co-creating language, attention, and shared mental models with humans. These studies introduce the notion of AI’s “social forcefield”: the way AI-generated language and suggestions alter how team members talk, what they focus on, and how they coordinate, even after the AI is gone.

One implication is critical: if you let AI into your organization, you are already changing the distributed cognitive architecture of your teams. The only real question is whether you will do this intentionally on a shared plane, or whether you will let it fragment into opaque, un-auditable silos.

Multi-agent systems: shared memory wins

The same theme is emerging in multi-agent systems research.

Experiments with shared memory architectures show that when agents can read and write to a common memory pool, they not only solve tasks more accurately, they do it with significantly lower resource usage.

One recent framework on “collaborative memory” demonstrates that multi-agent setups with shared memory maintain higher accuracy and cut resource usage by up to ~60% in overlapping-task scenarios compared to isolated memory baselines. [arxiv; sigarch]

I picture it like this: researchers are building “agentic knowledge graphs” where multiple agents coordinate by leaving structured information for each other in a shared graph, instead of trying to pass everything through prompt text or direct messaging. This allows specialized agents to collaborate by composing over a persistent, queryable representation of what the system already knows.
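A minimal sketch of the difference, assuming a plain key-value memory. The `Memory` and `Agent` classes and the example questions are hypothetical, not taken from any of the frameworks mentioned above, and the toy does not reproduce the cited numbers. It only shows why overlapping tasks get cheaper when agents write findings to one pool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Memory:
    """Hypothetical store of findings, keyed by the question they answer."""
    facts: Dict[str, str] = field(default_factory=dict)


@dataclass
class Agent:
    name: str
    memory: Memory
    investigations: int = 0  # expensive lookups this agent had to perform

    def resolve(self, question: str, investigate: Callable[[str], str]) -> str:
        # Reuse a finding if anyone writing to this memory already stored it.
        if question in self.memory.facts:
            return self.memory.facts[question]
        self.investigations += 1
        answer = investigate(question)
        self.memory.facts[question] = answer  # leave it for the next reader
        return answer


def investigate(question: str) -> str:
    # Stand-in for an expensive step (LLM call, log search, code analysis).
    return f"finding for: {question}"


def run(agents: List[Agent], questions: List[str]) -> int:
    for agent in agents:
        for question in questions:
            agent.resolve(question, investigate)
    return sum(agent.investigations for agent in agents)


questions = ["why is checkout flaky?", "who owns the billing service?"]

# Isolated: every agent gets its own private memory.
isolated_total = run([Agent(f"agent-{i}", Memory()) for i in range(3)], questions)

# Shared: all agents read and write the same pool.
pool = Memory()
shared_total = run([Agent(f"agent-{i}", pool) for i in range(3)], questions)
```

With three agents and two overlapping questions, the isolated setup performs six investigations and the shared one performs two. The gap widens with every agent you add.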

What excites me can be summed up as: “distributed cognition on the human side, shared memory on the agent side.”

They are pointing to the same architectural move: co-construct a shared representational space where humans and agents both read and write.

Why this becomes a survival issue for engineering orgs

This is where the “we will not survive” part stops being rhetorical.

Organizational research on AI in the workplace is converging on a few patterns: AI-augmented teams outperform others when they design collaboration around shared knowledge and transparent coordination.

Meanwhile, naive automation that ignores human workflows can erode trust and performance. Studies on collaborative AI show that AI is most beneficial when it complements human capabilities and connects into team-level knowledge, not when it acts as an isolated, opaque tool.

Now overlay that with what is happening in software development:

- Codebases are growing in size and complexity.

- AI coding tools are delivering 20–40% productivity gains per developer when used effectively.

- The number of agents and tools per team is exploding.

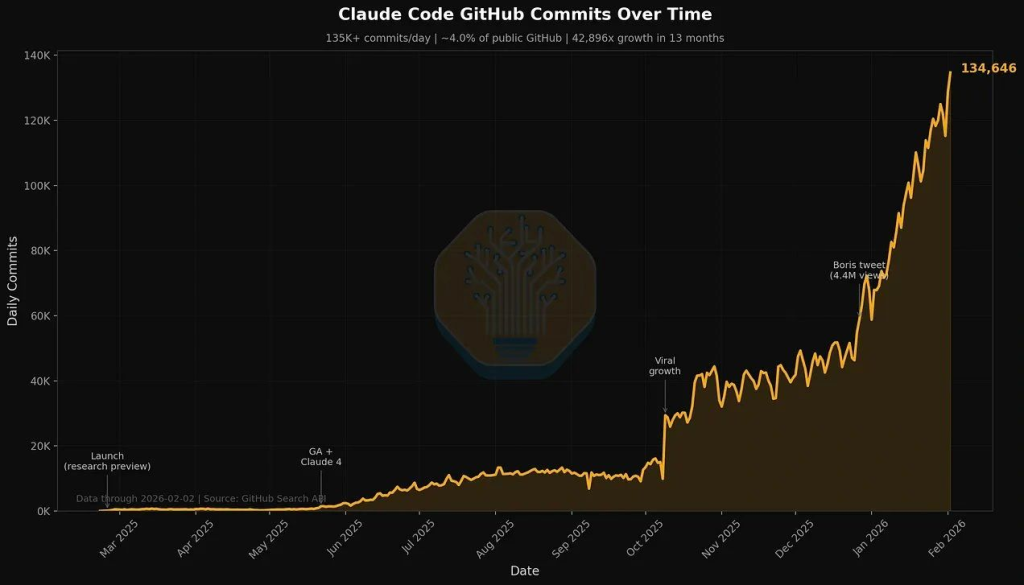

Look at this crazy graph. I don’t know about you, but 100% of my commits are co-authored with Claude Code.

If you keep adding uncoordinated agents, you get escalating re-explanation cost, divergent “shadow knowledge bases”, and subtle misalignments in how different parts of the org understand the same system.

If your competitors instead build a unified human–AI knowledge plane, they will not just code faster, they will think as an integrated system.

They get:

- Compounding memory: every investigation, decision, and incident becomes structured knowledge that agents and humans can reuse.

- Lower cognitive load: engineers query the shared plane instead of juggling 5–10 separate tools and chats.

- Better safety and governance: leaders can audit what agents know, what they did, and why, because the knowledge is actionable and transparent.

At that point it stops being a “nice to have.”

It becomes the substrate for how your organization thinks. Teams that do not make that shift will simply be outcompeted on cycle time, error rate, and learning rate over the next 5–10 years.

What a shared knowledge plane looks like

Concretely, a shared human-AI knowledge plane looks less like “another wiki” and more like an active knowledge graph that both humans and agents inhabit.

- It is MCP-native or equivalent: agents can read and write to the same graph via standard interfaces, not bespoke glue code for each tool.

- It is active: as agents triage incidents, refactor code, or research a library, other agents continuously follow up and post structured knowledge back into the graph.

- It is curated: internal agents help summarize, normalize, and link that raw knowledge into more digestible, higher-level artifacts that humans can actually use, while humans retain the power to correct, annotate, and shape it.

- It is shared: humans and agents query the exact same graph for context, decisions, and prior work, so “what do we know?” has a single operational answer.
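As a rough illustration of the properties above, here is an in-memory sketch of a graph that humans and agents both write to and query. Everything in it is an assumption for illustration: the `KnowledgeGraph` class, the `author` prefixes, and the node ids are hypothetical. It does not model MCP or any product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Node:
    id: str
    kind: str             # e.g. "service", "incident", "decision"
    summary: str
    author: str           # who wrote it, human or agent, kept for auditing
    written_at: datetime


@dataclass
class KnowledgeGraph:
    nodes: Dict[str, Node] = field(default_factory=dict)
    # Each edge is (source id, relation, target id).
    edges: Set[Tuple[str, str, str]] = field(default_factory=set)

    def write(self, id: str, kind: str, summary: str, author: str) -> Node:
        node = Node(id, kind, summary, author, datetime.now(timezone.utc))
        self.nodes[id] = node
        return node

    def link(self, source: str, relation: str, target: str) -> None:
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both ends of an edge must already exist")
        self.edges.add((source, relation, target))

    def about(self, node_id: str) -> List[Node]:
        """Nodes directly connected to node_id, in either direction."""
        neighbors = set()
        for source, _relation, target in self.edges:
            if source == node_id:
                neighbors.add(target)
            elif target == node_id:
                neighbors.add(source)
        return [self.nodes[n] for n in sorted(neighbors)]


graph = KnowledgeGraph()

# A human records the service; an agent records what it found during triage.
graph.write("svc:checkout", "service", "Checkout API", author="human:dana")
graph.write("inc:142", "incident",
            "Timeouts traced to connection pool exhaustion",
            author="agent:triage-bot")
graph.link("inc:142", "affects", "svc:checkout")

# A human decision lands in the same graph, linked to the agent's finding.
graph.write("dec:17", "decision", "Cap the pool at 50 connections per pod",
            author="human:dana")
graph.link("dec:17", "resolves", "inc:142")

# Humans and agents ask the same question through the same call.
incident_context = [(n.id, n.author) for n in graph.about("inc:142")]
```

Because every node carries its author, the same structure that answers "what do we know about this incident?" also answers "who, or which agent, told us that?". A production version would need persistence, access control, and conflict handling, which this sketch leaves out.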

In such a system, onboarding a new agent is not starting from zero context; it is plugging into an existing shared memory with clear access policies.

Onboarding a new human is not handing them a graveyard of documents; it is giving them a living, queryable map of what the team and its agents have already learned.

Why this year matters

We are no longer in the toy chatbot phase. Agentic systems, shared memories, and graph-based orchestration are shipping into production right now. The decisions you make this year about how your team works with AI will harden into infrastructure and habits that are very hard to change later.

If you let every team and developer bolt on isolated agents with private memories, you are implicitly choosing a fragmented cognitive architecture that will amplify confusion and technical debt as usage scales.

If instead you invest in collaboration rather than layoffs – one graph, many humans and agents – you are choosing an architecture where every interaction makes the whole system smarter, safer, and faster.

This is why I do not think “If we do not work together, we will not survive” is fluffy ethics copy. It is an architectural statement about how your organization will think, decide, and build in the age of AI.

The only real question is: will your humans and your agents learn to think together on a shared plane, or will you drown in the silos you are building today?

This is exactly the existential problem we are trying to solve with knowledgeplane.io: a shared plane where humans and AI agents operate over the same active knowledge graph.